Recently I gave a talk at Mapping Festival in Geneva, as part of a roundtable entitled “Life Through the Eyes of Machines”, with Dan Williams and moderated by Nicolas Nova. [What follows is a very condensed version of my lecture.]

My talk was entitled “If a computer can’t find your face, do you not have one?”, which is a quote from an artist’s statement for the project A Machine Frame of Mind by Brooklyn Brown. Brown’s project looks at machine vision as a material to be designed, and posits “…that the machine-readable world is something we are both constructing and should continue to design for in order to demystify and expose advanced technological processes. Shifting perspectives allows for the discovery of other realities that offer control and enjoyment of how we are seen and understood by computation.”

So I opened the talk with existential questions about “how we are seen and understood”, and in particular about human faces and their importance to the machine’s gaze. I also threw in a quote from Walter Benjamin (gathered from a paper by Bernard Rhie, more on him later): “To experience the aura of a phenomenon means to invest it with the capability of returning the gaze.”

From Brown’s work I transitioned to the outmoded pseudoscience of phrenology, wherein mapping regions of the face and head, and making detailed measurements of them, was once seen as a way of understanding a person’s “true nature”. Phrenology now seems quaint and laughable, but the desire to afford so much importance to the appearance of the human head surfaces in other ways, such as our ongoing squeamishness regarding face transplants. Perhaps the taboo is partly to do with the lack of instant results (often several operations are required, and the face must reform to the new structure underneath). But it also stems from the simple idea that, despite the life-changing and positive results for the recipient, the person who received the face is no longer themselves and is wearing a mask.

I was delighted to recently discover the work of American scholar Bernard Rhie, who writes extensively about the importance of the human face and particularly about the late writings of Wittgenstein regarding faces. I quoted from one of Rhie’s recent papers:

“…I suggest that by seeing the connections between aesthetic perception and the way we perceive faces, we can better appreciate the deeper stakes of ongoing theoretical disputes about the concept of aesthetic expression: especially debates about whether the expressive qualities of artworks are real or merely due to the projections of aesthetic beholders. What’s ultimately at stake in such disputes, I will suggest, is the proper acknowledgement (or denial) of the expressiveness of the human body. Philosophical debates about the expressivity of artworks, that is, serve as proxies for debates about the ontologies—and, in particular, the expressive nature—of human beings as such.”

Moving on from the face and its place in our social cultures, I discussed how reality will be sculpted by machine vision to make it easier for machines, and not necessarily people, to navigate, and I considered the aesthetics of this newly sculpted reality. Here I briefly touched on the “New Aesthetic”, which Bruce Sterling described as follows: “The New Aesthetic concerns itself with ‘an eruption of the digital into the physical.’ That eruption was inevitable. It’s been going on for a generation. It should be much better acculturated than it is.”

I also added that sometimes there is nothing for the machine to see or process, and what happens then? Dutch artist Sander Veenhof’s Google Glass Screensaver demo video is a humorous answer to that question:

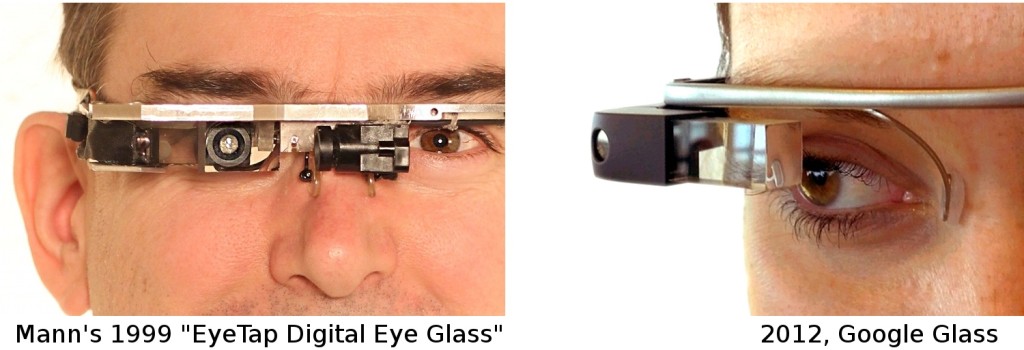

From the face and the aesthetic of a machine-readable world I moved on to security, sousveillance, and the use of the (machine-read or human-collected) image as evidence. In this area there is no better place to start than with Canadian computer scientist Steve Mann, widely recognized as the father of wearable computing, who has been wearing a device called the EyeTap (basically: a camera and small screen embedded in a wearable viewing apparatus) since the 1980s.

In an interview I conducted with him for Volume Magazine, I asked him about a recent incident in which various perpetrators attempted to forcibly remove his EyeTap device in a McDonalds in Paris.

“In 2012, Mann was assaulted at a McDonalds in Paris, with his EyeTap forcibly removed, despite producing a doctor’s letter indicating that the EyeTap can only be removed with tools. McDonalds has issued a few statements regarding the incident, but denies that he was assaulted by any staff member. In response to this action, (which was documented with the EyeTap, of course), Mann christened the corporate backlash against sousveillance ‘McVeillance’. The ethics of sousveillance and how it is countered by McVeillance is an issue highlighting the massive power of corporations to prevent us from documenting our own existence. Mann avers that sousveillance has both moral positive and moral negative possibilities, so a categorical (i.e. Kantian) moral imperative against it (i.e. McVeillance) is necessarily a moral negative, and that in addition McVeillance is “illegal, as per human rights laws”.”

The integration of machine vision with the body, and the public discomfort with it (this is not the first time Mann has had his device forcibly removed), raise the question of the legal gray zone these technologies inhabit, both when watching and when watching back. Mann is in fact trying to draft legislation to address this, because the law is a slow beast and, without prodding, there may never be any guidelines to counter McVeillance.

The whole incident at McDonalds was captured by Mann’s EyeTap, providing some damning evidence against the perps. The tonnage of images collected by Mann and those who will inevitably follow him (Mann is constantly streaming and transmitting) raises further issues about the possible nefarious uses of such images: one can easily imagine hired hackers destroying your database if they know you have images from a crime scene, for example. To address this, Mann suggests redundant storage and communities of trust, but even with those in place this will remain a sticky issue.

As seen in the image above, Mann’s pioneering work in its current stage looks eerily like what Google has developed and named Glass. If Google’s dreams come true and many of us are soon wearing a Glass, what are the right and wrong questions about this turn of events? The wrong questions, I would suggest, are how cool or uncool it will look, how much it costs, or whether saying “OK, Glass” to activate it will become tiresome.

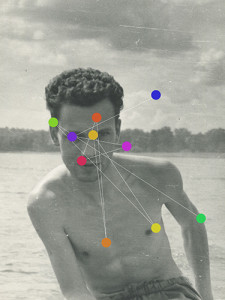

Better questions are: what will be our unwitting collaboration with industry? How will Google make $ from your daily life? An easy example is Google Glass tracking your eye movements over shop shelves — valuable data that can then be sold back to the shops so they can understand why the zit cream isn’t selling. Artist Janek Simon recently produced an open source system for tracking eye movements and exhibited the system as well as the resulting images at Zacheta Gallery in Warsaw. The images were lovely and indicated where people’s eyes lingered: some obvious places, some not so obvious. It’s easy to see how this is interesting information for several parties.

The value of where the eye rests, of what we see, and of the “attention economy” raises the prospect of a “deletive reality” made possible by machine vision. Much as a hacker might eliminate your database of images when you record something that could hold someone criminally liable, we could simply choose not to see certain things in the first place, like the homeless person on the corner. An art project that has already explored this is Artvertiser by Julian Oliver (dubbed “improved reality”), wherein users hold up a viewing apparatus that lets them see the city with art in place of advertising billboards.

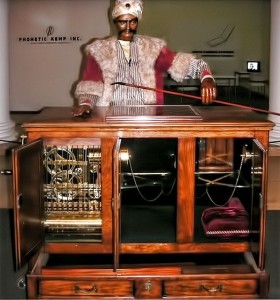

Then there are the very limits of the systems we create. Machines are our creations, and they are limited by the imaginations and capacities of their creators. Humankind has long been fascinated by the possibility of creating machines that can do tasks as well as we can, or better. The Mechanical Turk (1770, created by Wolfgang von Kempelen) is an example of an early automaton that amazed viewers: a machine was able to beat humans at chess! Later it was revealed that the secret of the Turk’s success was a skilled human chess player hidden in the cabinet underneath, running the show.

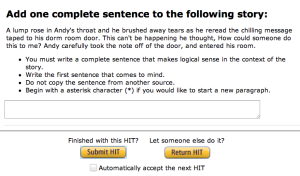

Many years later, Amazon, better known as an online bookseller, created a system called Amazon Mechanical Turk. The system matches workers with tasks, or HITs (Human Intelligence Tasks) in their parlance. The HITs are varied and sometimes strange, but have one thing in common: they are tasks that humans can do very easily, but that computers find difficult. One example is looking at a series of images and sorting them into pictures of dogs and pictures of cats. Amazon Mechanical Turk workers perform these tasks for pennies.

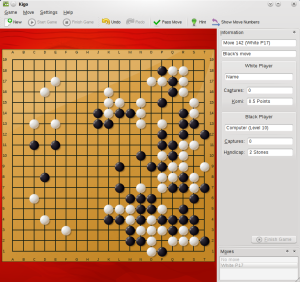

Another prominent example of the differences between machines and their human overlords is the way that computers have finally overtaken humans in the realm of chess, with Kasparov vs. Deep Blue being the moment of truth. But despite the ability of machines to play chess, it is taking much longer (and many would argue it is still a long way off) for computers to reliably beat proficient humans at the game of Go, where the highest compliment is the ambiguous “good shape”.

We are left contemplating a world wherein (for now) anything built by humans can be foiled by humans. Reality will be increasingly sculpted to facilitate machine vision and other kinds of automation, so new methods of subverting these systems will also be invented (creative uses of makeup are one method: see the CV Dazzle project by Adam Harvey, which was discussed at length in Dan Williams’ talk). This reality-sculpting may look crude, because the systems we design are, for the time being, far cruder than we are. Tasks that require human intelligence to complete easily, ranging from playing a game of Go to identifying a photo of a cat on Amazon Mechanical Turk, will remain important for the near future. Etiquette on using your Google Glass in public remains an unsolved mystery, though your actions will become far less mysterious once analyzed (perhaps by Amazon Mechanical Turk workers).